To bridge this communications gap, our workforce at Mitsubishi Electric powered Exploration Laboratories has designed and constructed an AI process that does just that. We connect with the program scene-aware conversation, and we system to include things like it in autos.

As we generate down a street in downtown Los Angeles, our system’s synthesized voice supplies navigation recommendations. But it does not give the from time to time tough-to-abide by directions you’d get from an regular navigation method. Our program understands its surroundings and provides intuitive driving guidelines, the way a passenger sitting in the seat beside you may do. It may say, “Follow the black auto to change right” or “Turn still left at the developing with a billboard.” The process will also difficulty warnings, for instance: “Watch out for the oncoming bus in the reverse lane.”

To assistance improved automotive basic safety and autonomous driving, autos are staying outfitted with additional sensors than ever just before. Cameras, millimeter-wave radar, and ultrasonic sensors are applied for computerized cruise manage, emergency braking, lane trying to keep, and parking assistance. Cameras inside the automobile are getting applied to watch the health and fitness of drivers, also. But beyond the beeps that inform the driver to the presence of a vehicle in their blind spot or the vibrations of the steering wheel warning that the motor vehicle is drifting out of its lane, none of these sensors does a lot to change the driver’s conversation with the automobile.

Voice alerts provide a much much more adaptable way for the AI to assist the driver. Some modern scientific studies have demonstrated that spoken messages are the best way to express what the inform is about and are the preferable selection in small-urgency driving scenarios. And indeed, the vehicle marketplace is starting to embrace technologies that functions in the fashion of a virtual assistant. Certainly, some carmakers have announced designs to introduce conversational brokers that the two assist drivers with working their motor vehicles and support them to organize their day by day life.

https://www.youtube.com/look at?v=t0izXoT_Aoc

Scene-Conscious Conversation Technological know-how

www.youtube.com

The plan for setting up an intuitive navigation method based on an array of automotive sensors arrived up in 2012 for the duration of conversations with our colleagues at Mitsubishi Electric’s automotive business enterprise division in Sanda, Japan. We famous that when you’re sitting up coming to the driver, you don’t say, “Turn right in 20 meters.” In its place, you are going to say, “Turn at that Starbucks on the corner.” You could also warn the driver of a lane that is clogged up forward or of a bicycle that’s about to cross the car’s path. And if the driver misunderstands what you say, you will go on to clarify what you meant. Even though this approach to providing instructions or guidance will come obviously to people today, it is effectively past the abilities of today’s car-navigation techniques.

Even though we were being keen to build these kinds of an innovative car-navigation assist, lots of of the ingredient systems, which includes the eyesight and language elements, ended up not sufficiently mature. So we place the idea on hold, expecting to revisit it when the time was ripe. We experienced been studying numerous of the systems that would be wanted, like object detection and tracking, depth estimation, semantic scene labeling, eyesight-based localization, and speech processing. And these technologies ended up advancing fast, thanks to the deep-mastering revolution.

Soon, we created a system that was capable of viewing a online video and answering concerns about it. To start off, we wrote code that could analyze equally the audio and online video options of some thing posted on YouTube and create automated captioning for it. A single of the essential insights from this function was the appreciation that in some sections of a online video, the audio could be giving far more information than the visible capabilities, and vice versa in other pieces. Constructing on this research, associates of our lab organized the initially community challenge on scene-knowledgeable dialogue in 2018, with the goal of developing and evaluating devices that can properly response thoughts about a online video scene.

We ended up especially interested in being able to identify no matter if a automobile up in advance was pursuing the wanted route, so that our technique could say to the driver, “Follow that auto.”

We then made the decision it was at last time to revisit the sensor-based navigation notion. At very first we imagined the part systems had been up to it, but we shortly recognized that the ability of AI for good-grained reasoning about a scene was nonetheless not excellent more than enough to develop a significant dialogue.

Robust AI that can purpose usually is even now quite considerably off, but a reasonable degree of reasoning is now feasible, so extended as it is confined in just the context of a certain application. We desired to make a automobile-navigation system that would aid the driver by offering its possess choose on what is going on in and about the car or truck.

A single problem that immediately turned apparent was how to get the automobile to decide its place precisely. GPS sometimes was not fantastic adequate, specially in city canyons. It could not convey to us, for example, just how shut the vehicle was to an intersection and was even significantly less probably to give precise lane-amount information.

We thus turned to the identical mapping technology that supports experimental autonomous driving, wherever camera and lidar (laser radar) data help to identify the car on a a few-dimensional map. Luckily, Mitsubishi Electric powered has a cell mapping process that supplies the necessary centimeter-degree precision, and the lab was testing and marketing and advertising this platform in the Los Angeles spot. That method permitted us to accumulate all the details we required.

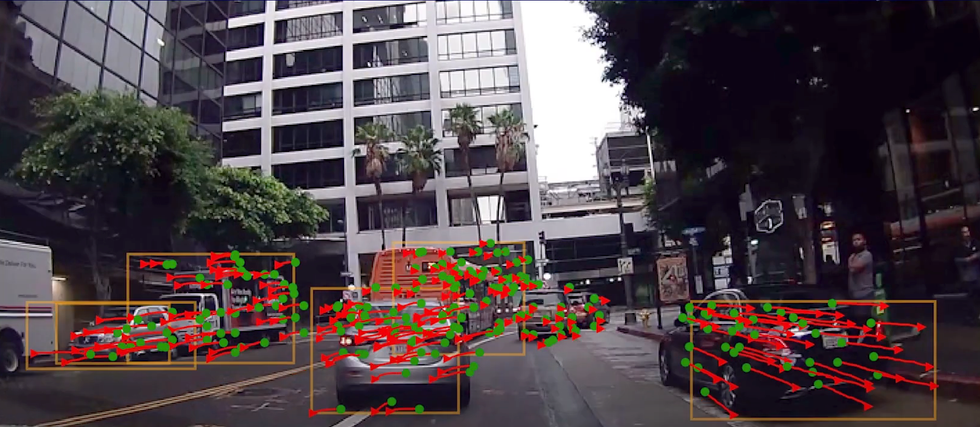

The navigation system judges the motion of cars, applying an array of vectors [arrows] whose orientation and length signify the way and velocity. Then the method conveys that data to the driver in plain language.Mitsubishi Electrical Exploration Laboratories

A crucial goal was to deliver steerage primarily based on landmarks. We realized how to practice deep-learning types to detect tens or hundreds of item courses in a scene, but finding the products to decide on which of all those objects to mention—”object saliency”—needed extra thought. We settled on a regression neural-network design that deemed item kind, measurement, depth, and length from the intersection, the object’s distinctness relative to other candidate objects, and the distinct route currently being regarded at the minute. For instance, if the driver wants to flip still left, it would probable be helpful to refer to an object on the still left that is effortless for the driver to recognize. “Follow the crimson truck that is turning still left,” the process could say. If it doesn’t find any salient objects, it can normally supply up distance-primarily based navigation guidance: “Turn remaining in 40 meters.”

We needed to avoid this sort of robotic converse as a lot as possible, nevertheless. Our answer was to develop a equipment-understanding network that graphs the relative depth and spatial spots of all the objects in the scene, then bases the language processing on this scene graph. This technique not only enables us to accomplish reasoning about the objects at a individual instant but also to capture how they’re altering around time.

This kind of dynamic examination assists the procedure comprehend the movement of pedestrians and other motor vehicles. We have been particularly intrigued in remaining ready to establish whether or not a car up in advance was next the wanted route, so that our method could say to the driver, “Follow that motor vehicle.” To a man or woman in a automobile in motion, most components of the scene will them selves show up to be relocating, which is why we desired a way to take away the static objects in the history. This is trickier than it appears: Just distinguishing a person car from an additional by shade is alone hard, supplied the improvements in illumination and the climate. That is why we anticipate to add other attributes in addition to shade, these types of as the make or model of a motor vehicle or probably a recognizable emblem, say, that of a U.S. Postal Provider truck.

Natural-language generation was the ultimate piece in the puzzle. Finally, our procedure could make the ideal instruction or warning in the form of a sentence applying a rules-based mostly tactic.

The car’s navigation program functions on best of a 3D representation of the road—here, numerous lanes bracketed by trees and condominium buildings. The representation is created by the fusion of details from radar, lidar, and other sensors.Mitsubishi Electric Research Laboratories

Procedures-dependent sentence technology can presently be seen in simplified type in personal computer games in which algorithms deliver situational messages based on what the activity player does. For driving, a big assortment of eventualities can be expected, and policies-based mostly sentence generation can hence be programmed in accordance with them. Of class, it is not possible to know just about every problem a driver may perhaps working experience. To bridge the gap, we will have to improve the system’s potential to respond to scenarios for which it has not been specially programmed, working with info collected in true time. Currently this job is quite hard. As the engineering matures, the stability in between the two types of navigation will lean further more towards information-driven observations.

For occasion, it would be comforting for the passenger to know that the explanation why the car or truck is instantly changing lanes is for the reason that it desires to stay clear of an obstacle on the highway or prevent a visitors jam up forward by obtaining off at the upcoming exit. Additionally, we anticipate organic-language interfaces to be useful when the car detects a scenario it has not viewed before, a problem that may well involve a superior stage of cognition. If, for occasion, the car strategies a road blocked by building, with no distinct path all over it, the automobile could ask the passenger for assistance. The passenger may possibly then say a thing like, “It seems attainable to make a still left turn right after the 2nd site visitors cone.”

For the reason that the vehicle’s recognition of its environment is clear to travellers, they are able to interpret and comprehend the actions being taken by the autonomous automobile. This sort of understanding has been demonstrated to create a bigger level of have confidence in and perceived safety.

We envision this new pattern of conversation involving men and women and their machines as enabling a far more natural—and far more human—way of handling automation. Without a doubt, it has been argued that context-dependent dialogues are a cornerstone of human-laptop conversation.

Mitsubishi’s scene-aware interactive program labels objects of fascination and locates them on a GPS map.Mitsubishi Electric powered Investigation Laboratories

Vehicles will before long come equipped with language-based warning devices that warn drivers to pedestrians and cyclists as well as inanimate obstacles on the road. Three to five yrs from now, this functionality will advance to route advice based mostly on landmarks and, in the long run, to scene-mindful virtual assistants that have interaction drivers and travellers in conversations about encompassing spots and events. This kind of dialogues could possibly reference Yelp evaluations of nearby places to eat or engage in travelogue-fashion storytelling, say, when driving through fascinating or historic regions.

Truck motorists, far too, can get help navigating an unfamiliar distribution heart or get some hitching support. Utilized in other domains, cell robots could support weary vacationers with their luggage and manual them to their rooms, or cleanse up a spill in aisle 9, and human operators could supply significant-amount steering to supply drones as they tactic a drop-off site.

This technological innovation also reaches past the difficulty of mobility. Healthcare virtual assistants might detect the feasible onset of a stroke or an elevated coronary heart charge, converse with a person to confirm no matter if there is without a doubt a dilemma, relay a message to medical professionals to search for assistance, and if the unexpected emergency is actual, alert initial responders. House appliances could foresee a user’s intent, say, by turning down an air conditioner when the consumer leaves the household. These kinds of capabilities would constitute a advantage for the standard man or woman, but they would be a match-changer for people with disabilities.

Purely natural-voice processing for device-to-human communications has come a extensive way. Reaching the style of fluid interactions in between robots and humans as portrayed on Television set or in flicks may possibly even now be some distance off. But now, it’s at minimum seen on the horizon.

More Stories

Are You Aware of the Latest Tech News?

Top 7 Questions To Ask Your Computer Repair Service Provider

How To Fix Msxml3 DLL Errors On Windows